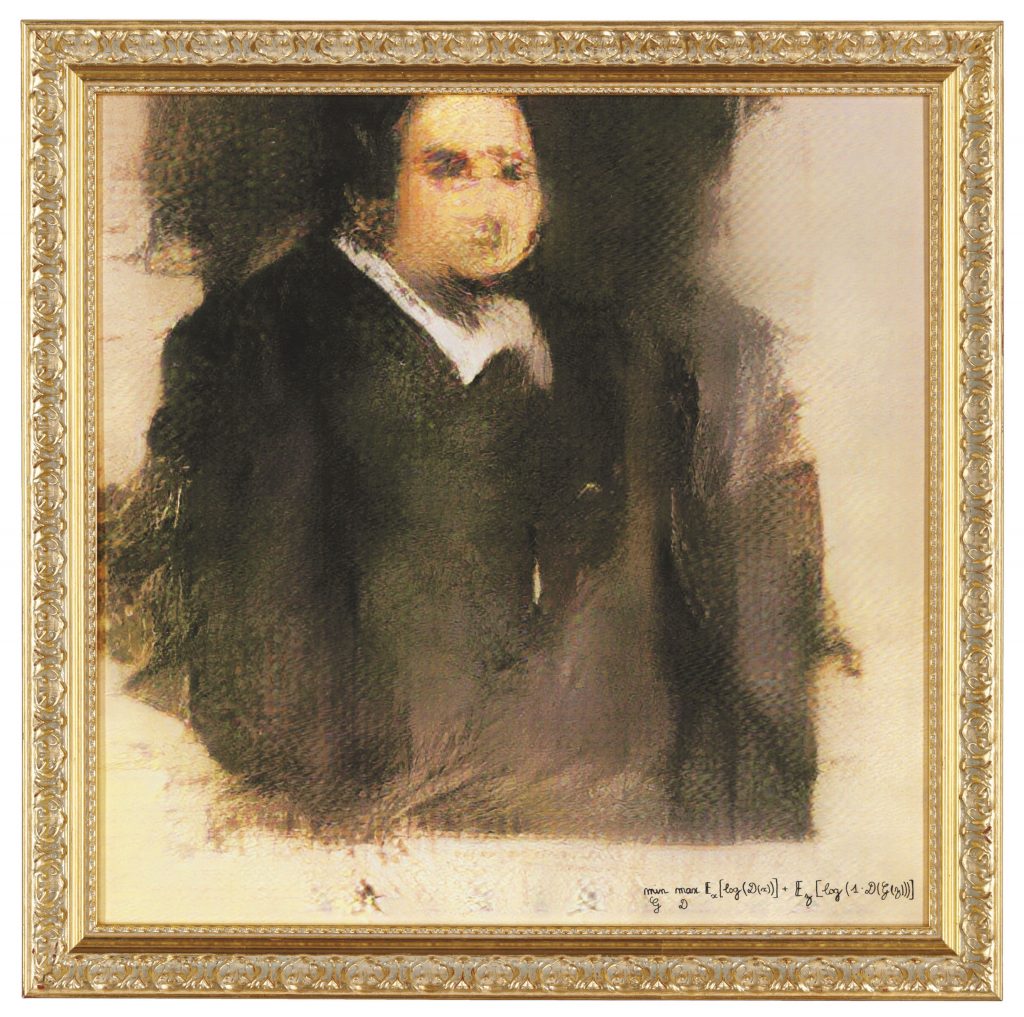

On October 25, 2018, the renowned auction house Christie’s put up for sale a work made with an Artificial Intelligence (AI) program. Portrait of Edmond Belamy sold for $432,500 (approximately R$ 2.5 million), about 45 times its estimated value. In place of the artist’s name, however, the blurred portrait was signed with the equation used to generate it. Christie’s also used this fact to build buzz around its own auction; a text published by the house stated: “This portrait is not the product of a human mind”. However, the formula the AI used to generate Portrait of Edmond Belamy was created by the human minds that make up the Parisian art collective Obvious. Regardless, it was the first work made with an AI program to go under the hammer at a major auction house, attracting significant media attention and some speculation about what Artificial Intelligence means for the future of art.

Artists have been using AI to create for the past 50 years, notes Ahmed Elgammal, a professor in the Department of Computer Science at Rutgers University. According to Elgammal, one of the most prominent examples is AARON, the program written by Harold Cohen; another is Lillian Schwartz, a pioneer in the use of computer graphics in art, who also experimented with AI. What, then, sparked the speculation surrounding Portrait of Edmond Belamy? “The auctioned work at Christie’s is part of a new wave of AI art that has appeared in recent years. Traditionally, artists who use computers to generate art need to write detailed code that specifies the ‘rules for the desired aesthetic’”, explains Elgammal. “In contrast, what characterizes this new wave is that the algorithms are set up by the artists to ‘learn’ the aesthetics by looking at many images using machine-learning technology. The algorithm then generates new images that follow the aesthetics it had learned”, he adds. The most widely used tool for this is GANs (Generative Adversarial Networks), introduced by Ian Goodfellow in 2014. In the case of Portrait of Edmond Belamy, the collective Obvious used a database of fifteen thousand portraits painted between the 14th and 20th centuries. Trained on this collection, the algorithm fails to imitate the pre-curated input correctly and instead generates distorted images, the professor notes.

“It’s entirely plausible that AI will become more common in art as the technology becomes more widely available”, says art critic and former Frieze editor Dan Fox in an interview with arte!brasileiros. “Most likely, AI will simply co-exist alongside painting, video, sculpture, performance, sound and whatever else artists want to use”, he adds. Fox also points out that we must not forget that “the average artist, at the moment, isn’t able to afford access to this technology. Most can barely afford their rent and bills. This world of auction prices is so utterly divorced from the average artist’s life right now that I think you have to acknowledge that whoever is currently working with AI is coming from a position of economic power or access to research institutions”. While enthusiasm for Portrait of Edmond Belamy may be fueled by the rhetoric of progress and a yearning for “the future” and innovation, the critic suggests that, behind the smoke and mirrors, “AI will be of interest to the art industry if human beings can make money from it”.

Can a robot be creative?

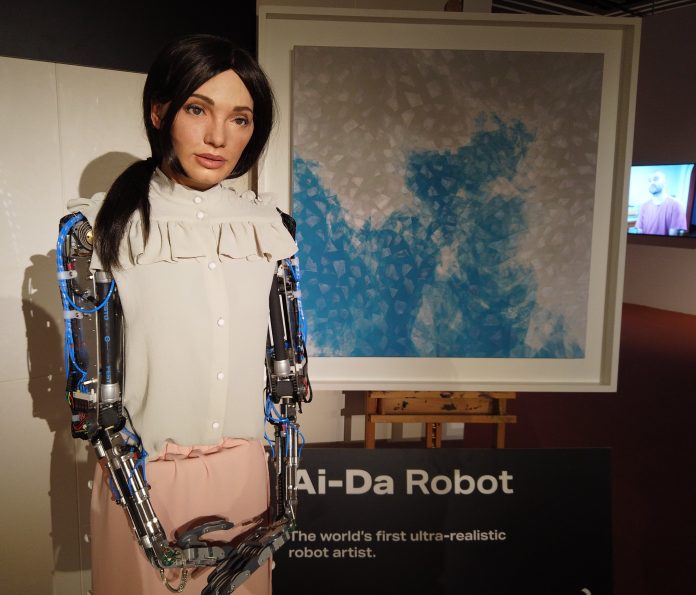

In the year following the Christie’s sale, Ai-Da was completed. Named after Ada Lovelace, an English mathematician recognized for having written the first algorithm intended to be processed by a machine, she describes herself as “the world’s first ultra-realistic robot artist with Artificial Intelligence”. Ai-Da explains that she draws using cameras implanted in her eyes and that, in collaboration with humans, she also paints, sculpts, and performs. “I am a contemporary artist and I am contemporary art at the same time”, says Ai-Da, before posing the question her audience should already be asking: “How can a robot be an artist?” As intricate as that question may seem, there is a deeper and more challenging one: “Can a robot be creative?”

Back in 2003, author and science journalist Matthew Hutson explored the topic in his master’s thesis at the Massachusetts Institute of Technology (MIT). In Artificial Intelligence and Musical Creativity: Computing Beethoven’s Tenth, he argues that “computers simulate human behavior using shortcuts. They may appear human on the outside (writing jokes, fugues, or poems) but they work differently under the hood. The facades are props, not backed up by real understanding. They use patterns of arrangements of words and notes and lines. But they find these patterns using statistics and cannot explain why they are there”. Hutson lists three main reasons for this: “First, computers work with different hardware than the human brain. Mushy brains full of neurons and flat silicon wafers packed with transistors will never behave the same and can never run the same software. Second, we humans don’t understand ourselves well enough to translate our software to another piece of hardware. Third, computers are disembodied, and understanding requires living physically in the world”. On the last point, he argues that particular qualities of human intelligence result directly from the particular physical structure of our brains and bodies: “We live in an analog (continuous, infinitely detailed) reality, but computers use digital information made up of finite numbers of ones and zeroes”.

Asked by arte!brasileiros whether his 2003 thesis still holds up almost two decades later, Hutson says that even today he wouldn’t necessarily describe AI’s artistic output as creative, even when it is visually or semantically interesting, because the AI doesn’t understand that it is making art or expressing something deeper. As Seth Lloyd, professor of mechanical engineering and physics at MIT, would put it, “raw information-processing power does not mean sophisticated information-processing power”. Philosopher Daniel C. Dennett explains that “these machines do not (yet) have the goals or strategies or capacities for self-criticism and innovation to permit them to transcend their databases by reflectively thinking about their own thinking and their own goals”. However, Hutson adds, “these may be human-centric concepts. AI may evolve to be just as creative as humans but in a completely different way, such that we wouldn’t recognize its creativity, nor it ours”.

Can culture lose jobs to AI?

“Nowadays, when cars and refrigerators are jammed with microprocessors and much of human society revolves around computers and cell phones connected by the Internet, it seems prosaic to emphasize the centrality of information, computation, and communication”, writes Lloyd in an article for Slate. We have reached a point of no return, and over the next century the question of machine creativity will be just one of many uncertainties surrounding technology. More tangible, for now, is the possible unemployment crisis triggered by advances in AI and robotics.

For example, a 2013 study by researchers at the University of Oxford found that nearly half of all jobs in the U.S. were at risk of being automated within the next two decades. On a global scale, up to twenty million manufacturing jobs could be replaced by robots by 2030, according to a more recent analysis by Oxford Economics. The 2019 analysis also warns that repetitive and/or mechanical jobs, “where robots can carry out tasks more rapidly than humans”, are at greater risk of being eliminated, while jobs that require more “compassion, creativity, and social intelligence” are more likely to continue to be performed by humans. Since the art world is not made up only of curators and collectors, it has reason for concern as well. Earlier this year, during the pandemic, Tim Schneider, market editor for Artnet, warned: “What happens when you combine mass layoffs, a keenness to minimize in-person interactions for health reasons, and tech entrepreneurs’ willingness to heavily discount their devices so they can secure potentially lucrative proof of concept in the cultural sector?”

Adding a qualitative lens to the quantitative picture presented by Oxford Economics, historian and philosopher Yuval Noah Harari brings the nature of work and its specialization into the equation: “Say you shift back most production from Honduras or Bangladesh to the US and to Germany because the human salaries are no longer a part of the equation, and it’s cheaper to produce the shirt in California than it is in Honduras, so what will the people there do? And you can say, ‘OK, but there will be many more jobs for software engineers’. But we are not teaching the kids in Honduras to be software engineers”.

Agents or tools? AI and ethics

Estimates related to automation seem comparatively well grounded. Beyond them, it is difficult to form a clear picture of the future of AI, whether in terms of creativity or consciousness. “Technological prediction is particularly chancy, given that technologies progress by a series of refinements, are halted by obstacles and overcome by innovation”, says Lloyd. “Many obstacles and some innovations can be anticipated, but more cannot”.

For Dennett, “strong AI”, or artificial general intelligence, is possible in principle in the long run but not desirable. “The far more constrained AI that’s practically possible today is not necessarily evil. But it poses its own set of dangers”, he warns. According to the philosopher, we do not need artificial conscious agents (which is what he means by “strong AI”) because there is “a surfeit of natural conscious agents, enough to handle whatever tasks should be reserved for such ‘special and privileged entities’”; what we need instead are intelligent tools.

To justify not building artificial conscious agents, Dennett argues that “however autonomous they might become (and in principle they can be as autonomous, as self-enhancing or self-creating, as any person), they would not—without special provision, which might be waived—share with us natural conscious agents our vulnerability or our mortality”. Here he echoes the father of cybernetics, Norbert Wiener, who cautioned: “The machine like the djinnee, which can learn and can make decisions on the basis of its learning, will in no way be obliged to make such decisions as we should have made, or will be acceptable to us”.

On the ethical development of AI, Fei-Fei Li, co-director of Stanford University’s Human-Centered AI Institute, argues that AI should be studied in a multidisciplinary way, cross-pollinating with economics, ethics, law, philosophy, history, cognitive science and other fields, “because there is so much more we need to understand in terms of AI’s social, human, anthropological and ethical impact”. Also within academia, Hutson suggests that “conferences and journals could guide what gets published, by taking a technology’s broader impact into account during peer review, and requiring submissions to address ethical concerns”. In parallel, he points out, funding agencies and internal review boards at universities and corporations could step in to shape research at its nascent stage. Once scientific findings are published, “regulations could ensure that companies don’t sell harmful products and services, and laws or treaties could ensure that governments don’t deploy them”.

*Modifications were made to the article for clarity.